Are You Evaluating Like It’s 2015?

How confident are you that your training evaluation strategy is better than what artificial intelligence (AI) can generate? Have you been updating your training evaluation strategy along with your program materials as technology advances? If not, here is some quick guidance for making training evaluation key to accomplishing your goals, not just a box to check.

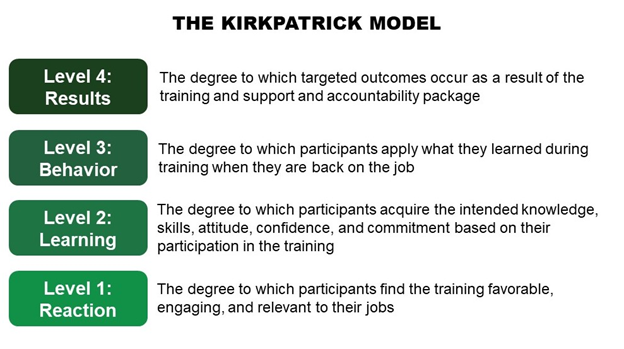

Use The Kirkpatrick Model

The Kirkpatrick Model is the worldwide standard for leveraging and validating talent investments. When used correctly, it is a powerful way to deliver good program outcomes. Most training is evaluated at least partially with the Kirkpatrick Model, but not necessarily correctly.

Ensure Your Evaluation Provides Value

Many organizations still cling to an outdated, one-dimensional evaluation approach that yields little benefit. Each level in the Kirkpatrick Model is evaluated in about the same way for every program, whether big or small, critically important or just routine. The tools are selected because they are easy to use, and they sometimes collect only numeric data.

Here is an example of a traditional evaluation approach that yields little useful information or organizational benefit:

Ineffective Traditional Evaluation Approach

- Level 1 Reaction: Post-program survey

- Level 2 Learning: Testing

- Level 3 Behavior: Delayed survey or none

- Level 4 Results: None

It does not have to be this way. Especially for your most important initiatives, there is a newer and better way.

Upgrade to a Blended Evaluation Plan®

Most training professionals have heard of blended learning. It revolutionized the training industry in 1999 by moving learning from a one-dimensional in-person event to a hybrid approach that combines multiple modalities, usually including technology.

In much the same way, the Blended Evaluation Plan® revolutionized training evaluation in 2015. A Blended Evaluation Plan is a methodology in which data are collected from multiple sources using multiple methods, in a blended fashion that considers all four Kirkpatrick levels.

However, not every organization has kept up with the times and implemented this more effective approach. A Blended Evaluation Plan resolves the deficiencies of the traditional approach.

Blended Evaluation Plan Benefits

Using a Blended Evaluation Plan supports the accomplishment of training and organizational goals. Instead of waiting some time after training and then measuring what happened, a Blended Evaluation Plan includes methods to monitor progress along the way, so that shortcomings are identified and resolved and small successes are highlighted and shared. In this way, the Blended Evaluation Plan maximizes program performance and results.

You may be thinking that this sounds difficult and expensive. It isn't. A Blended Evaluation Plan is purposeful in its design: it is based on the information required to make good training decisions (usefulness) and on the data stakeholders require to fund the program (credibility). Training evaluation resources are focused where they will create the most benefit.

It is a common misconception that meaningful training evaluation requires more resources. In fact, Blended Evaluation Plans often streamline institutionalized evaluation practices at Level 1 Reaction and Level 2 Learning, replacing them with more practical and flexible methods matched to the importance of each program. This frees resources to invest in the most important programs and at the most important Kirkpatrick level, Level 3 Behavior.

Get Started with a Blended Evaluation Plan

Here are some simple ways to get started with a Blended Evaluation Plan.

1. Review your evaluation practices

Use the Kirkpatrick Model to review what evaluation typically takes place at each of the four levels for the training and programs you conduct. Do not be discouraged if you find that little or no evaluation is occurring at Levels 3 and 4. Finding these gaps is the purpose of this audit.

2. Rework your evaluation approach

Define what training evaluation data is useful to the training department for continual improvement, and to stakeholders to justify program investments. Typically, data at Levels 1 and 2 is useful to the training group, and data at Levels 3 and 4 is what is valued by stakeholders. See if you can streamline what you do at Levels 1 and 2 to save resources for Levels 3 and 4.

For example, if you conduct a 20-question post-program survey for every program and the data is seldom if ever tabulated or reviewed, consider whether you could discontinue or shorten it for non-mission-critical or legacy programs. Instead, review data tracked automatically by your learning management system for computer-based training, or ask facilitators to provide their observations for instructor-led training. Think flexible, quick, and easy when it comes to Levels 1 and 2.

3. Create a strong Level 3 library of evaluation methods

Using the resources you save at Kirkpatrick Levels 1 and 2, generate a list of all the ways you can support on-the-job implementation of what was learned. Think about ways you can not only measure what happened, but also reinforce the importance of performing new behaviors on the job and encourage and reward them when they occur. Make sure there is also a good system of accountability.

Create a library that contains a variety of evaluation sources, including both human and technological options. In your list, remember to consider the efforts of not just the training department, but also the training graduates, their supervisors, stakeholders, and support departments like IT and human resources. Things the training graduates can do themselves to track their progress and support each other on the job are sometimes overlooked.

The result should be a long list of training evaluation and performance support methods, tools, and techniques to consider in a flexible framework for all your training programs.

4. Select a mission-critical program

Finally, select a program that is critically important to your organization to beta test the new Blended Evaluation Plan. It should be one with a significant investment behind it and high expectations for its outcomes. This may seem risky, but an important program receives more attention, so you will get a better test of your new evaluation plan. Additionally, the plan has built-in checkpoints, so if the program isn't going as well as it should, you will become aware of it sooner and can resolve the issues. Your new Blended Evaluation Plan is also likely to be more tailored and geared for success than the plan it replaces.

The Time is Now

Don’t evaluate your training like it’s 2015. Abandon old, ineffective, inflexible plans that overemphasize less important metrics such as enjoyment of the training and knowledge retention over time. Use a Blended Evaluation Plan approach to optimize the valuable resources you invest in training and evaluation, and ensure your training delivers the intended results.

Just as AI responds to what we say and do, your Blended Evaluation Plan can flex to fit each program and differing stakeholder needs. Use your new library of evaluation resources to create an optimized plan for maximum program results.

About the Author, Wendy Kayser Kirkpatrick
